
We can pause AI

If we can create a species smarter than us, I'm pretty sure we can figure out how to get a few corporations to coordinate. We've figured out harder coordination problems before. We've paused or slowed down other technologies, despite it being hard to monitor and people being incentivized to defect. Let's figure this out.


Me: We are superintelligent compared to animals and look at how we treat them.

Them: My cat has a pretty good life. 😸

Me: Cats are the animals we treat best, and we:
1) kidnap them from their families as children
2) forcefully sterilize them for "population control"
3) keep them in solitary confinement for most of every day, unable to leave the house, with no entertainment or company
4) take away all of their freedoms and find it cute when they stare longingly out the window at the world they will never be able to see

Would you want an AI to treat you that way?

Them: . . . . god, you must be awful at parties.

It's so funny when people say that we could just trade with a superintelligent/super-numerous AI

We don't trade with chimps. We don't trade with ants. We don't trade with pigs. We take what we want.

If there's something they have that we want, we enslave them. Or worse! We go and farm them!

A superintelligent/super-numerous AI killing us all isn't actually the worst outcome of this reckless gamble the tech companies are making with all our lives.

If the AI wants something that requires living humans and it's not aligned with our values, it could make factory farming look like a tropical vacation.

We're superintelligent compared to animals and we've created hell for trillions of them.

Let's not risk repeating this.

Would you rather:

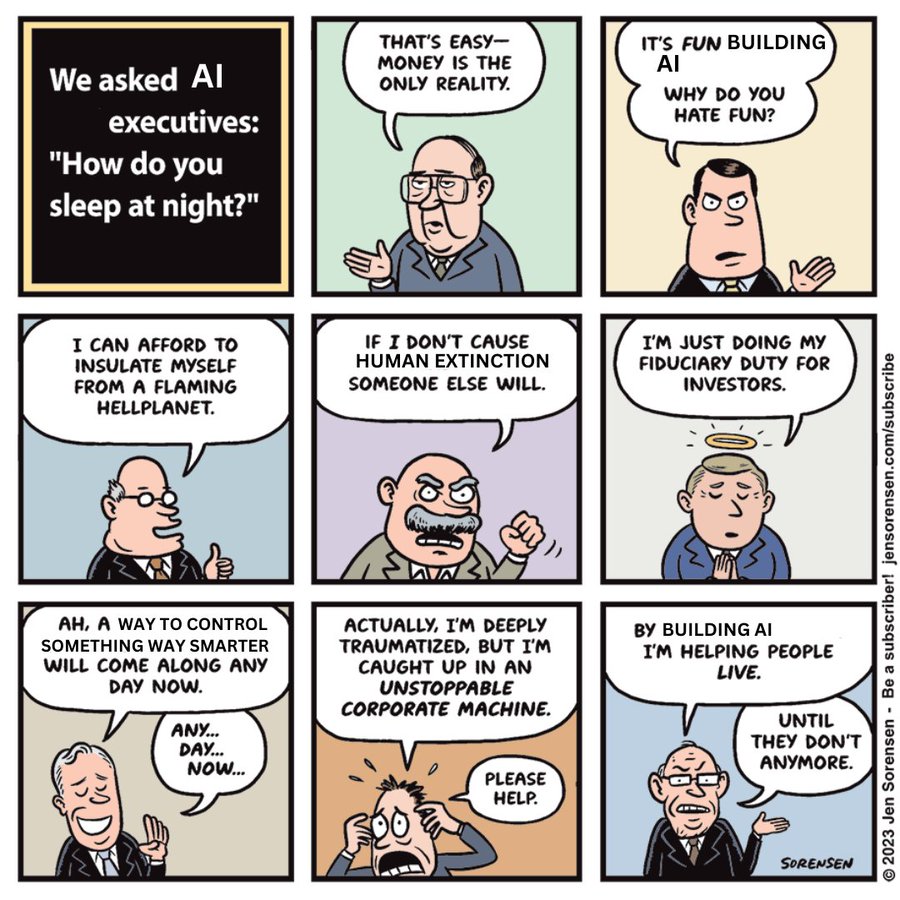

1) Risk killing everybody's families for the chance of utopia sooner?
2) Wait a bit, then have a much higher chance of utopia and a lower chance of killing people?

More specifically, the average AI scientist puts the chance that smarter-than-all-humans AI will cause human extinction at 16%.

That’s because right now we don’t know how to do this safely, without risking killing everybody, including everybody's families. Including all children. Including all pets. Everybody.

However, if we figure out how to do it safely before building it, we could reap all of the benefits of superintelligent AI without taking a 16% chance of it killing us all.

This is the actual choice we’re facing right now. Some people are trying to make it seem like it’s either build the AI as fast as possible or never. But that’s not the actual choice.

The choice is between fast and reckless, and delaying gratification to make it right.

An AI safety thought experiment that might make you happy:

Imagine a doctor discovers that a client of dubious rational abilities has a terminal illness that will almost definitely kill her in 10 years if left untreated.

If the doctor tells her about the illness, there’s a chance that the woman decides to try some treatments that make her die sooner. (She’s into a lot of quack medicine.)

However, she’ll definitely die in 10 years if she isn't told anything, and if she is told, there’s a higher chance that she tries some treatments that cure her.

The doctor tells her.

The woman proceeds to do a mix of treatments, some of which speed up her illness and some of which might actually cure her disease. It’s too soon to tell.

Is the doctor net negative for that woman?

No. The woman would definitely have died if she had left the disease untreated. Sure, she made the dubious choice of treatments that sped up her demise, but the only way she could get the effective treatment was if she knew the diagnosis in the first place.

Now, of course, the doctor is Eliezer and the woman of dubious rational abilities is humanity learning about the dangers of superintelligent AI.

Some people say Eliezer and the AI safety movement are net negative because our raising the alarm led to the launch of OpenAI, which sped up the AI suicide race.

But the thing is - the default outcome is death.

The choice isn’t:
1. Talk about AI risk, accidentally speed things up, then we all die
OR
2. Don’t talk about AI risk and then somehow we get aligned AGI

You can’t get an aligned AGI without talking about it. You cannot solve a problem that nobody knows exists.

The choice is:
1. Talk about AI risk, accidentally speed everything up, then we may or may not all die
2. Don’t talk about AI risk and then we almost definitely all die

So, even if it might have sped up AI development, this is the only way to eventually align AGI, and I am grateful for all the work the AI safety movement has done on this front so far.
You can actually pretty easily rule out certain causes of consciousness*

Just ask yourself - have you ever been conscious when you didn’t have X?

For example, if you have ever lost your sense of being a separate being from the universe while on psychedelics, you can know that “self-awareness” is not necessary for consciousness.

If you’ve ever been conscious and not making any decisions, you can know that decision making / “free will” is not necessary for consciousness.

*Consciousness defined here as qualia / internal experience / "what it's like to be a bat"

Thank you, to the people who have the strength and the moral resolve to look at that darkness in the world.

Here's a scenario* I imagine if superintelligent AI were aligned with my values.

I imagine a pig in a factory farm, screaming as she is lowered into the gas chamber.

I imagine suddenly, the gears stop moving.

Suddenly, through something that looks like magic to us and to a pig, but is no more magic than the internet is, she is in a grassy field with her babies. She’s in the perfect environment for pigs, whatever that is.

The pig, for the first time in her life, is safe and happy and free.

I imagine a North Korean woman being beaten by the guards of her prison camp, trying to protect her belly because she is likely pregnant from repeated rapes by these very same prison guards.

I imagine suddenly, the beatings stop.

She looks up, and through something that seems as magical to her as electricity seems to a cat, she is home. Her family is there and they hug and cry.

For the first time since she was disappeared in the middle of the night, she is safe and happy and free.

I imagine this happening everywhere. For all of the immense suffering in this world. For every big, gnarly, seemingly impossible problem in this world.

I imagine something far smarter than I could ever be. I imagine something far more good than I could ever be, going around solving it in a way that, when I look at it, fills me with the deepest joy I can imagine.

I imagine the AI showing itself to me in a form I would find the most inspiring and telling me “You helped make this happen. You took action. And one of those actions was really important for making this happen. So many terrible outcomes could have happened, but because you and others did something about it, now we’re here. So, what would you like to do, now that you can do pretty much whatever you want?”

And she’ll smile, because she’ll already know what I want to do.

And then she’ll give me my paradise.

I’ll spend the next few decades just seeing all of the happy people. I’ll see what their lives were like before, and I’ll watch the transformations.
(Recordings, so that no sentient being has to relive the suffering.)

I’ll see the end of torture. I’ll see the end of abuse. I’ll see the end of war. I’ll see the end of hunger. I’ll see the end of illness.

I’ll see the beginnings of love. Of joy. Of awe. Of adventure. Of freedom.

I will just bask in all of the happiness. In all of the suffering averted.

I don’t know how long I’ll do it for. Years? Decades? I don’t know. But I will bask.

And after? I don’t even know. I haven’t gotten that far. I’ll figure it out when we get there.

But for now - let’s just try to make sure we get there. Because this future is one worth fighting for.

*(Usual caveat: any specific scenario is unlikely, but it can be helpful to imagine scenarios anyway. We need to balance our goals of what we're avoiding with a vision of what we want.)

Thank you to the people who have the strength and the moral resolve to look at the darkness in the world.

I know it's hard, but we can only fix the problems we can look at.

Let's try to make sure that the superintelligent AI doesn't learn from how most humans treat animals. Let's try to make sure that the AI cares about all beings, no matter how intelligent, no matter how cute, no matter how emotionally salient.

Let's make a superbenevolent AI that is not just smarter than any human, but kinder than any human.

Imagine a corporation accidentally kills your dog

They say “We’re really sorry, but we didn’t know it would kill your dog. Half our team put the chance that it would kill your dog above 10%, but there had been no studies done on this new technology, so we couldn’t be certain it was dangerous.”

Is what the corporation did ethical or unethical?

Question 2: imagine an AI corporation accidentally kills all the dogs in the world. Also, all of the humans and other animals.

They say “We’re really sorry, but we didn’t know it would kill everybody. Half our team put the chance that it would kill everybody above 10%, but there had been no studies done on this new technology, so we couldn’t be certain it was dangerous.”

Is what the corporation did ethical or unethical?

~*~

I think it's easy to get lost in abstract land when talking about the risks of future AIs killing everybody.

It's important to remember that when experts say there's a risk AI kills us all, "all" includes your dog. "All" includes your cat. "All" includes your parents, your children, and everybody you love.

When thinking about AI and the risks it poses, to avoid scope insensitivity, try replacing the risks with a single, concrete loved one. And ask yourself "Am I OK with a corporation taking an X% risk that they kill this particular loved one?"


Kat Woods: I'm an effective altruist who co-founded Nonlinear, Charity Entrepreneurship, and Charity Science Health.

Archives

June 2024
